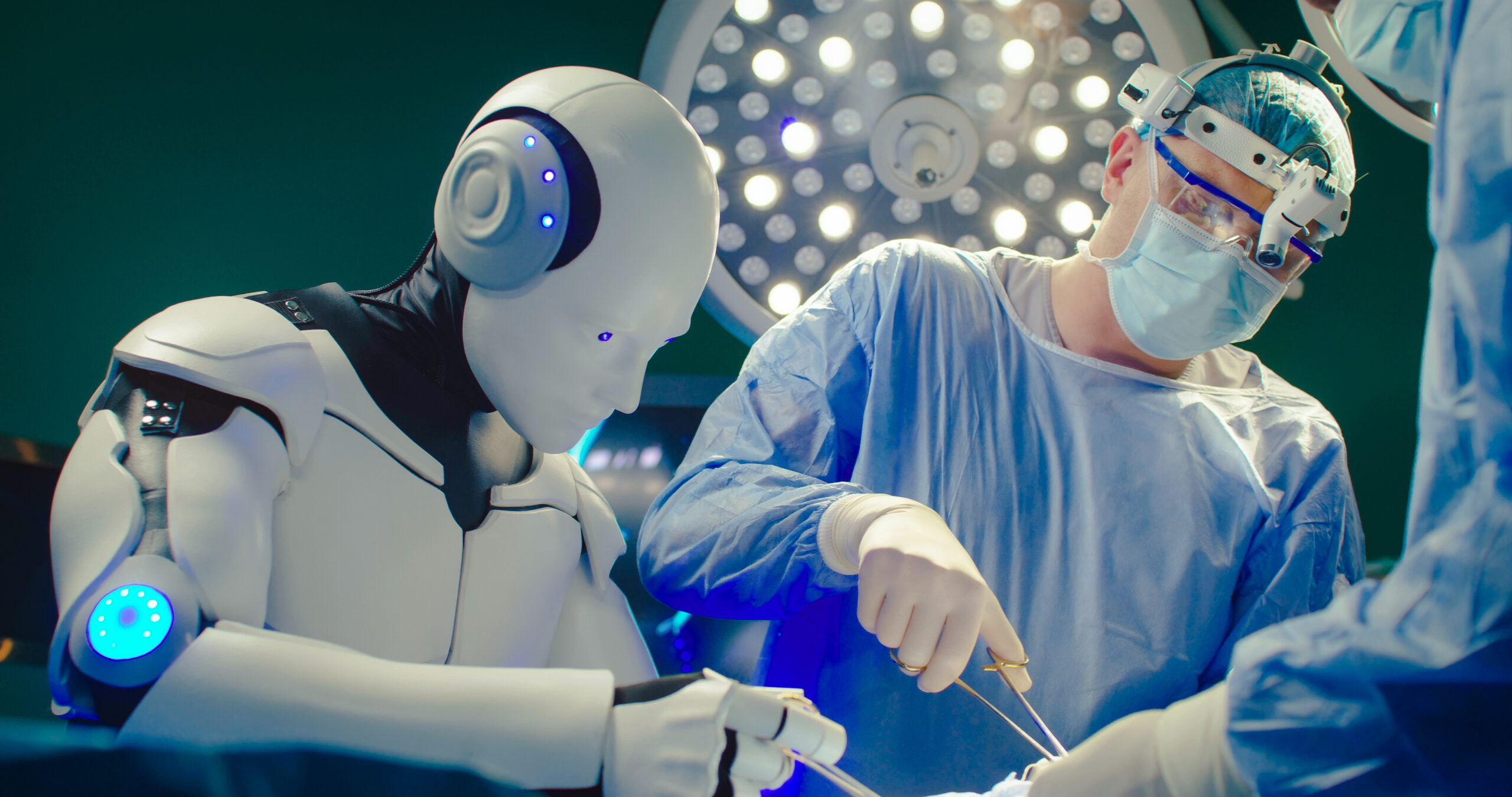

A recent study has highlighted a troubling trend: people are more inclined to trust medical advice from artificial intelligence than from qualified medical professionals, even when the AI-generated advice is inaccurate. The research, conducted by scholars from the Massachusetts Institute of Technology and published in the New England Journal of Medicine, asked 300 participants to assess medical responses from various sources, including medical doctors, online health platforms, and AI models such as ChatGPT.

Participants, comprising both experts and laypeople, rated the AI-produced responses as more accurate, valid, trustworthy, and complete than those provided by human doctors. Notably, neither group could reliably distinguish between the AI-generated advice and that of medical professionals.

In addition, the study presented participants with AI-generated medical guidance that had been identified as low in accuracy. Participants nevertheless perceived these responses as valid and trustworthy. Alarmingly, many indicated a strong likelihood of following the potentially harmful advice, which could lead to unnecessary medical interventions.

The implications of such preferences are significant. There are numerous documented incidents in which AI has given dangerous medical recommendations. In one case, a 35-year-old man in Morocco had to visit the emergency room after a chatbot suggested wrapping rubber bands around his hemorrhoid. In another, a 60-year-old man suffered severe health complications after following advice from ChatGPT to consume sodium bromide, a chemical primarily used for pool sanitation. He was hospitalized for three weeks with paranoia and hallucinations, as detailed in a case study published in August 2025 in the Annals of Internal Medicine Clinical Cases.

Dr. Darren Lebl, research service chief of spine surgery at the Hospital for Special Surgery in New York, commented on the risks associated with AI medical advice. He pointed out that many AI-generated recommendations lack scientific backing. “About a quarter of them were made up,” he noted, emphasizing the potential dangers of relying on AI for medical guidance.

The study's findings are echoed by a recent Censuswide survey, which found that approximately 40 percent of respondents trust medical advice from AI bots like ChatGPT. This growing reliance on artificial intelligence for health-related queries raises significant questions about the future of medical consultation and the potential consequences for patient safety.

As technology continues to evolve, the balance between human expertise and AI capabilities remains a critical issue. The study underscores the need for better public education on the limitations of AI in healthcare, so that individuals can distinguish credible medical advice from potentially harmful misinformation.